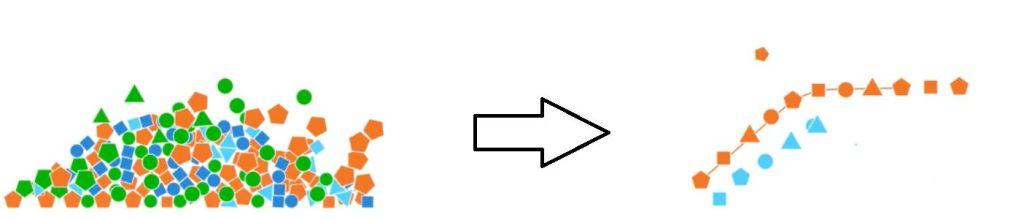

In the digital age, data has emerged as a new currency, fueling the operations of countless web platforms and applications. However, the raw data collected from various sources is often messy, inconsistent, and unstructured. This is where data wrangling comes into play – the process of transforming, cleaning, and preparing raw data into a usable format, unlocking its true potential for analysis, visualization, and application development.

What is Data Wrangling?

Data wrangling, also known as data munging or data preprocessing, is the process of cleaning, structuring, and transforming raw data into a format that is more suitable for analysis, visualization, and decision-making. It involves tasks such as handling missing values, removing duplicates, standardizing formats, and combining data from multiple sources.

At its core, data wrangling aims to make data more accessible and meaningful. This process enables data scientists, analysts, and developers to work with high-quality data, facilitating accurate insights and informed decision-making.
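
As a minimal sketch of two of the tasks mentioned above (standardizing formats and removing duplicates), the following uses pandas on a small made-up table. The column names and values are hypothetical and chosen only for illustration.

```python
import pandas as pd

# Hypothetical raw records with inconsistent casing, stray whitespace,
# one missing city, and a row that repeats once the text is normalized
raw = pd.DataFrame({
    "name": [" Alice ", "BOB", "alice", "Carol"],
    "city": ["new york", "Boston ", "New York", None],
})

tidy = raw.copy()

# Standardize formats: trim whitespace and use consistent capitalization
tidy["name"] = tidy["name"].str.strip().str.title()
tidy["city"] = tidy["city"].str.strip().str.title()

# Remove rows that are exact duplicates after normalization
tidy = tidy.drop_duplicates().reset_index(drop=True)

print(tidy)
```

Note that the two "Alice" rows only become duplicates after the text is normalized, which is why the standardization step comes first.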

Commercial Tools for Data Wrangling:

There are several commercial tools available for data wrangling that offer advanced features, user-friendly interfaces, and support for large-scale data processing. These tools are designed to streamline the data preparation process and make it more accessible to a broader range of users, including business analysts, data scientists, and non-technical professionals. Here are some popular commercial data wrangling tools:

- Trifacta: Trifacta Wrangler is a widely used commercial tool that provides a user-friendly interface for data wrangling tasks. It offers features such as data profiling, data cleaning, transformation, and enrichment. Trifacta’s visual interface allows users to interactively explore and transform data, making it suitable for both technical and non-technical users.

- Alteryx: Alteryx is a comprehensive platform that includes data preparation, blending, and advanced analytics capabilities. It offers a visual workflow designer that enables users to build data pipelines for cleansing, transforming, and integrating data from various sources. Alteryx also supports predictive analytics and spatial analysis.

- Tamr: Tamr focuses on data mastering and unifying data from disparate sources. It uses machine learning and human expertise to automate the process of data categorization, cleaning, and integration. Tamr is particularly useful for organizations dealing with complex data integration challenges.

- Paxata: Paxata, now part of DataRobot, is another popular commercial data preparation tool. It offers an intuitive interface for data profiling, cleaning, and transformation. Paxata’s smart algorithms help users automatically detect and fix data quality issues.

- Talend: Talend provides a data integration and transformation platform that covers a wide range of data-related tasks, including data wrangling. It offers both open-source and commercial versions, with visual design tools for building data pipelines, data mapping, and transformation.

- IBM InfoSphere DataStage: This tool by IBM offers data integration and transformation capabilities, including data cleansing, transformation, and integration. It supports both structured and unstructured data and can be used for large-scale data processing.

- Domo: Domo is a business intelligence platform that includes data preparation and visualization features. It allows users to connect to various data sources, cleanse and transform data, and create interactive dashboards and reports.

- Qlik Data Preparation: Qlik offers a data preparation tool that allows users to cleanse, transform, and enrich data from various sources. It integrates with other Qlik products for data visualization and analytics.

These commercial data wrangling tools often provide additional features such as collaboration, data governance, and integration with other data-related technologies. They are suitable for organizations that require robust data preparation capabilities and are willing to invest in specialized tools to streamline their data workflows. However, it’s important to evaluate the specific needs of your organization and the features offered by each tool before making a decision.

Tools for Data Wrangling in Python:

Several libraries and tools have emerged to simplify the data wrangling process. The first two entries below are Python libraries; the rest are standalone tools that are often used alongside them:

- Pandas: A powerful Python library that provides data structures and functions for efficiently manipulating and analyzing data. Pandas offers a wide range of features for handling data, including data cleaning, transformation, aggregation, and merging.

- NumPy: While not specifically designed for data wrangling, NumPy is a fundamental library for numerical computations in Python. It provides support for arrays, matrices, and mathematical functions, which are often used in data manipulation tasks.

- OpenRefine: An open-source tool that specializes in cleaning and transforming messy data. OpenRefine offers an interactive interface for exploring, cleaning, and reconciling inconsistencies in data.

- Trifacta Wrangler: A user-friendly tool that enables users to visually explore and clean data through a web interface. Trifacta Wrangler automates many aspects of data cleaning and transformation.

- DataWrangler: Developed by Stanford, DataWrangler is a web-based tool that facilitates the transformation of raw data through a series of interactive steps.

Non-Python Tools:

If you don’t use Python, or you want to speed up your data wrangling without investing in proprietary applications or services, look no further than the standard Linux/Unix utilities. Unix-like systems provide most of the text and data processing capabilities you are likely to need, right out of the box. Below is a list of common (and some not so common) Linux/Unix utilities for text and data processing:

- grep: Searches for patterns in text files, useful for finding specific strings of text within files.

- sed (Stream Editor): Processes text streams, allowing for text manipulation like search and replace, insertion, and deletion.

- awk: Processes text files and extracts/manipulates data, especially structured data with columns.

- cut: Removes sections from lines of files, often used for extracting columns from delimited text files.

- sort: Sorts lines of text files, arranging data in a specified order.

- uniq: Filters out duplicate lines from sorted text input, aiding in finding unique items.

- tr (Translate): Translates or deletes characters from input streams, facilitating simple text transformations.

- paste: Merges lines from multiple files side by side, often used to combine data sets.

- join: Combines lines from two files based on a common field, similar to a database join operation.

- split: Divides a file into smaller parts, useful for processing large files.

- wc (Word Count): Counts the number of lines, words, and characters in a file, providing basic statistics.

- head: Displays the beginning lines of a file, often used to preview data.

- tail: Displays the ending lines of a file, useful for observing the last parts of a file.

- comm: Compares two sorted files line by line, identifying common and unique lines.

- diff: Compares two text files and displays differences, highlighting changes.

- patch: Applies changes generated by the ‘diff’ command to another file, useful for version control and updates.

If you are unfamiliar with any of these utilities and do data wrangling on a regular basis, I strongly suggest you look into them. At the very least, get familiar with them, as many tasks can be completed with these tools in less time than it takes to fire up your IDE.
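
To give a feel for how these utilities chain together, here is a rough Python sketch of what a pipeline such as `cut -d, -f2 data.csv | sort | uniq -c` computes: it extracts the second comma-separated field and counts how often each value occurs. The sample lines and the file name `data.csv` are hypothetical stand-ins, and the real utilities differ in details such as output formatting.

```python
from collections import Counter

# Hypothetical comma-delimited lines standing in for a file's contents
lines = [
    "1,apple,3",
    "2,pear,5",
    "3,apple,1",
    "4,fig,2",
]

# cut -d, -f2: keep the second comma-separated field of each line
second_field = [line.split(",")[1] for line in lines]

# sort | uniq -c: count occurrences of each value, listed in sorted order
counts = sorted(Counter(second_field).items())

for value, count in counts:
    print(count, value)
```

The shell version is a single line, which is the point of the comparison: for quick, one-off questions about a text file, the utilities often need far less setup.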

Methods for Data Wrangling:

Data wrangling encompasses a range of techniques to convert raw data into usable information. Some common methods include:

Data Cleaning: This involves identifying and correcting errors in the dataset, such as dealing with missing values, outliers, and inconsistencies. For example, using Pandas in Python, you can remove rows with missing values as follows:

    import pandas as pd

    # Load the dataset
    data = pd.read_csv('data.csv')

    # Drop rows with missing values
    cleaned_data = data.dropna()
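
Dropping rows is not the only option. The sketch below, which uses a hypothetical `price` column with made-up values, fills a missing value with the column median and then filters out an outlier using the 1.5 × IQR rule of thumb. Both choices are assumptions made for illustration; the right strategy depends on the dataset.

```python
import pandas as pd

# Hypothetical data: one missing price and one implausibly large value
df = pd.DataFrame({"price": [10.0, 12.0, None, 11.0, 500.0]})

# Fill the missing value with the column median instead of dropping the row
df["price"] = df["price"].fillna(df["price"].median())

# Keep only rows within 1.5 * IQR of the first and third quartiles
q1, q3 = df["price"].quantile([0.25, 0.75])
iqr = q3 - q1
in_range = df["price"].between(q1 - 1.5 * iqr, q3 + 1.5 * iqr)
cleaned = df[in_range]

print(cleaned)
```

The median is used rather than the mean because it is not pulled upward by the extreme value that is about to be removed.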

Data Transformation: This step involves converting data into a suitable format. It might include converting categorical data into numerical values, scaling features, and applying mathematical transformations. With pandas and NumPy, you can min-max normalize the numeric columns of a dataset as follows:

    import numpy as np

    # Select the numeric columns, then rescale each one to the [0, 1] range
    numeric = data.select_dtypes(include=np.number)
    normalized_data = (numeric - numeric.min()) / (numeric.max() - numeric.min())
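
The other transformations mentioned above can be sketched in the same way. The example below one-hot encodes a categorical column with `pd.get_dummies` and standardizes a numeric column to a z-score. The `size` and `weight` columns and their values are hypothetical.

```python
import pandas as pd

# Hypothetical dataset with one categorical and one numeric column
df = pd.DataFrame({
    "size": ["small", "large", "medium", "small"],
    "weight": [1.0, 3.0, 2.0, 2.0],
})

# Convert the categorical column into 0/1 indicator columns
encoded = pd.get_dummies(df, columns=["size"], dtype=int)

# Standardize the numeric column: subtract the mean, divide by the
# sample standard deviation (pandas uses ddof=1 by default)
encoded["weight_z"] = (df["weight"] - df["weight"].mean()) / df["weight"].std()

print(encoded)
```

One-hot encoding avoids implying an order between categories, at the cost of adding one column per distinct value.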

Data Aggregation: Aggregating data involves summarizing information to a higher level. This is often used to create meaningful insights from large datasets. Pandas allows for easy aggregation, like computing the mean of a column:

    # Compute the mean of a column
    mean_value = data['column_name'].mean()
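
Aggregation becomes more useful when it is applied per group. As a small sketch, assuming a hypothetical `orders` table with `region` and `amount` columns, `groupby` combined with `agg` produces several summary statistics at once:

```python
import pandas as pd

# Hypothetical order data
orders = pd.DataFrame({
    "region": ["east", "west", "east", "west", "east"],
    "amount": [100, 250, 50, 150, 30],
})

# Summarize the amount column per region: row count, total, and average
summary = orders.groupby("region")["amount"].agg(["count", "sum", "mean"])

print(summary)
```

The result has one row per region and one column per statistic, which is usually a convenient shape for reporting or charting.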

Data Merging: When working with multiple datasets, merging is crucial to combine relevant information. Pandas offers functions to merge datasets based on common columns:

    # Merge two datasets based on a common column
    # (data1 and data2 are two previously loaded DataFrames)
    merged_data = pd.merge(data1, data2, on='common_column')
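
By default `pd.merge` performs an inner join, so rows without a match in both datasets are silently dropped. The sketch below uses two hypothetical tables to show a left join instead, with `indicator=True` adding a `_merge` column that records where each row came from:

```python
import pandas as pd

# Hypothetical tables sharing a customer_id column
customers = pd.DataFrame({"customer_id": [1, 2, 3], "name": ["Ann", "Ben", "Cy"]})
orders = pd.DataFrame({"customer_id": [1, 1, 3, 4], "total": [20, 35, 15, 99]})

# Keep every customer, even those without orders, and flag the match status
merged = pd.merge(customers, orders, on="customer_id", how="left", indicator=True)

print(merged)
```

Here the customer without orders is kept with a missing `total`, while the order for the unknown customer 4 is left out. Checking the `_merge` column is a quick way to spot such mismatches before they distort later analysis.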

Data’s Role in the Web and Applications:

Data is the lifeblood of the modern web and applications. From recommendation systems on e-commerce platforms to personalized content on social media, data drives user experiences. Through effective data wrangling, organizations can leverage data to create more accurate insights, predictive models, and improved user interactions.

In web development, data wrangling ensures that the information presented to users is reliable and relevant. For instance, travel booking websites rely on data from various sources to display accurate flight prices, hotel availability, and travel options. Without data wrangling, inconsistencies in data could lead to inaccurate bookings and dissatisfied users.
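
To make the travel example concrete, here is a small sketch of reconciling fares from two sources. The feeds, flight codes, and price formats are all invented for illustration; real provider data will differ.

```python
import pandas as pd

# Hypothetical fare feeds from two providers with different price formats
feed_a = pd.DataFrame({"flight": ["XY100", "XY200"], "price": ["$1,200.50", "$310.00"]})
feed_b = pd.DataFrame({"flight": ["XY100", "XY200"], "price": ["1200.5", "325"]})


def to_amount(prices: pd.Series) -> pd.Series:
    """Strip currency symbols and thousands separators, then convert to numbers."""
    return pd.to_numeric(prices.str.replace(r"[$,]", "", regex=True))


feed_a["price"] = to_amount(feed_a["price"])
feed_b["price"] = to_amount(feed_b["price"])

# Line the two feeds up by flight and flag fares that disagree
both = feed_a.merge(feed_b, on="flight", suffixes=("_a", "_b"))
both["mismatch"] = both["price_a"] != both["price_b"]

print(both)
```

Only after the formats are standardized can the two prices be compared at all, which is the kind of inconsistency that would otherwise surface as a wrong fare on the booking page.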

In conclusion, data wrangling plays a pivotal role in harnessing the power of data for web platforms and applications. It transforms raw, messy data into valuable insights that drive decision-making and enhance user experiences. With the aid of tools like Pandas and NumPy, data professionals can efficiently navigate the challenges of data cleaning, transformation, and aggregation, paving the way for a data-driven digital landscape.